This is part 2 of a two-part post on provisioning IoT devices to Azure IoT Hub through the REST API of the Azure IoT Device Provisioning Service (DPS). Part 1 described the process for devices using X.509 certificate attestation; this part describes doing it with Symmetric Key attestation.

I won’t repeat all the introduction, caveats, etc. that accompanied part 1, but you may want to take a quick peek at them so you know what I will, and will not, be covering here.

If you don’t fully understand the Symmetric Key attestation options for DPS, I recommend you go read the docs here first and then come back…

Ok, welcome back!

So let’s just jump right in. As in part 1, there will be a couple of ‘setup’ sections, depending on whether you choose Individual Enrollments or Group Enrollments in DPS. Once that is done and the accompanying attestation tokens are generated, the actual API calls are identical for the two.

Therefore, for the first two sections, you can choose the one that matches your desired enrollment type and read it for the required setup (or read them both if you are the curious type), then you can just jump to the bottom section for the actual API calls.

But, before we start with setup, there’s a little prep work to do.

Prep work (aka – how do I generate the SAS tokens?)

Symmetric Key attestation in DPS works, just like pretty much all the rest of Azure, on the concept of SAS tokens. In Azure IoT, these tokens are typically derived from a cryptographic key tied to a device or to a service-access level. As mentioned in the overview link above (in case you didn’t read it), DPS has two options for these keys. One option is an individual key per device, either specified by you or auto-generated in the individual enrollment. The other option is a group enrollment key, from which you derive a device-specific key that you then use to generate the SAS token.

Generating SAS tokens

So first, let’s talk about, and prep for, generating our SAS tokens, independent of which kind of key we use. Using and generating SAS tokens works generally the same way for both DPS and IoT Hub, so you can see the process and sample code in various languages here. For my testing, I pretty much shamelessly stole (er, re-used) the Python example from that page, which I slightly modified (to actually call the generate_sas_token method).

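```python
# Requires Python 3
from base64 import b64encode, b64decode
from hashlib import sha256
from hmac import HMAC
from time import time
from urllib.parse import quote_plus, urlencode


def generate_sas_token(uri, key, policy_name, expiry=3600):
    # Token expiry as a Unix timestamp (seconds since the epoch)
    ttl = time() + expiry
    # String to sign: the URL-encoded resource URI, a newline, then the expiry
    sign_key = '%s\n%d' % (quote_plus(uri), int(ttl))
    print(sign_key)  # debug output; the token itself is printed last
    # HMAC-SHA256 of the string to sign, keyed with the base64-decoded device key
    signature = b64encode(HMAC(b64decode(key), sign_key.encode('utf-8'), sha256).digest()).decode('utf-8')

    rawtoken = {
        'sr': uri,
        'sig': signature,
        'se': str(int(ttl)),
    }

    if policy_name is not None:
        rawtoken['skn'] = policy_name

    # urlencode percent-encodes every value, including the URI and the signature
    return 'SharedAccessSignature ' + urlencode(rawtoken)


uri = '[resource_uri]'
key = '[device_key]'
expiry = 3600  # [expiry_in_seconds]
policy = '[policy]'

print(generate_sas_token(uri, key, policy, expiry))
```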

The parameters at the bottom of the script, which I hardcoded because I am lazy (er, busy), are as follows:

- [resource_uri] is the URI of the resource you are trying to reach with this token. For DPS, it has the form ‘[dps_scope_id]/registrations/[dps_registration_id]’, where [dps_scope_id] is the scope id of your DPS instance (found on the Overview blade of your DPS instance in the Azure portal) and [dps_registration_id] is the registration id you want to use for your device. For an individual enrollment, it is whatever you specified in the enrollment; for a group enrollment, it can be anything you want as long as it is unique. Common choices are combinations of serial numbers, MAC addresses, GUIDs, etc.

- [device_key] is the device key associated with your device. This is either the key specified or auto-generated for you in an individual enrollment, or a derived key for a group enrollment, as explained a little further below.

- [expiry_in_seconds] is the validity period of this SAS token in sec… ok, I’m not going to insult your intelligence here.

- [policy] is the policy the key above is associated with. For DPS device registration, this is hard-coded to ‘registration’.

So an example set of inputs for a device called ‘dps-sym-key-test01’ might look like this (with the scope id and key modified to protect my DPS instance from the Russians!):

```python
uri = '0ne00057505/registrations/dps-sym-key-test01'
key = 'gPD2SOUYSOMXygVZA+pupNvWckqaS3Qnu+BUBbw7TbIZU7y2UZ5ksp4uMJfdV+nTIBayN+fZIZco4tS7oeVR/A=='
expiry = 3600000
policy = 'registration'
```

Save the script above to a *.py file. (Obviously, you’ll need Python installed to run it, if you don’t already have it.)
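To make the [resource_uri] format and the signing input a bit more concrete, here is a small sketch that builds the DPS resource URI from a scope id and registration id, and produces the exact string that generate_sas_token signs: the URL-encoded URI, a newline, then the expiry as a Unix timestamp. The helper names `build_resource_uri` and `string_to_sign` are hypothetical, and the inputs are just the example values from above.

```python
from urllib.parse import quote_plus


def build_resource_uri(scope_id: str, registration_id: str) -> str:
    """Hypothetical helper: DPS resource URI of the form [dps_scope_id]/registrations/[registration_id]."""
    return f"{scope_id}/registrations/{registration_id}"


def string_to_sign(resource_uri: str, expiry_epoch: int) -> str:
    """Hypothetical helper: what generate_sas_token feeds to HMAC-SHA256.

    quote_plus() encodes '/' as %2F by default, so the URI is fully escaped.
    """
    return f"{quote_plus(resource_uri)}\n{expiry_epoch}"


if __name__ == "__main__":
    uri = build_resource_uri("0ne00057505", "dps-sym-key-test01")
    print(uri)  # 0ne00057505/registrations/dps-sym-key-test01
    print(repr(string_to_sign(uri, 1568718116)))
```

Keeping the URI building separate like this makes it easy to confirm that the scope id and registration id line up with the URL you later call.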

If you are only doing individual enrollments, you can skip the next section, unless you are just curious.

Generating derived keys

For group enrollments, there are no individual enrollment records for devices in DPS, and therefore no individual device keys. To make this work, we take the enrollment-group level key and cryptographically derive a device-specific key from it. Specifically, the device key is an HMAC-SHA256 of the device’s registration id, keyed with the base64-decoded enrollment-group key, and then base64-encoded. The DPS team has provided some scripts/commands for doing this for both bash and PowerShell here. I’ll repeat the bash command below just to demonstrate.

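```bash
KEY='[group enrollment key]'
REG_ID='[registration id]'

# Decode the base64 group key into hex so openssl can use it as the HMAC key
keybytes=$(echo "$KEY" | base64 --decode | xxd -p -u -c 1000)
# HMAC-SHA256 the registration id with that key, then base64-encode the result
echo -n "$REG_ID" | openssl sha256 -mac HMAC -macopt hexkey:$keybytes -binary | base64
```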

where [group enrollment key] is the key from your group enrollment in DPS and [registration id] is the registration id for the device. This generates a cryptographic key that uniquely represents the device specified by your registration id. We can then use that key as the ‘[device_key]’ in the Python script above to generate a SAS token specific to that device within the group enrollment.
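If you would rather not shell out to openssl, here is a sketch of the same derivation in Python using only the standard library. `derive_device_key` is a hypothetical helper name; it performs the same three steps as the bash command (base64-decode the group key, HMAC-SHA256 the registration id, base64-encode the digest), so for the same inputs it should produce the same key. The group key in the usage example is a made-up placeholder.

```python
import base64
import hashlib
import hmac


def derive_device_key(group_key_b64: str, registration_id: str) -> str:
    """Derive a device-specific key from a DPS group enrollment key (hypothetical helper)."""
    key_bytes = base64.b64decode(group_key_b64)            # group key is base64 in the portal
    digest = hmac.new(key_bytes, registration_id.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")        # base64, ready for generate_sas_token


if __name__ == "__main__":
    # Placeholder only: use the Primary Key from your own group enrollment instead.
    group_key = base64.b64encode(b"not-a-real-group-key").decode("ascii")
    print(derive_device_key(group_key, "dps-test-sym-device01"))
```

Because the derivation is deterministic, a device only needs the group key (or the pre-derived key) and its own registration id to recompute its credentials.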

Ok – enough prep, let’s get to it. The next section shows the DPS setup for an Individual Enrollment. Skip to the section beneath it for Group Enrollment.

DPS Individual Enrollment – setup

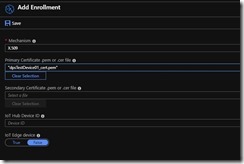

The setup for an individual symmetric key enrollment in DPS is pretty straightforward. Navigate to the “Manage enrollments” blade from the left nav of your DPS instance and click “Add Individual Enrollment”. On the ‘Add Enrollment’ blade, for Mechanism, choose “Symmetric Key”, then below it enter your desired registration id (and an optional IoT Hub device id, if you want it to be different). It should look similar to the screenshot.

Click Save. Once saved, drill back into the enrollment, copy the Primary Key, and note your registration id; we’ll need both later.

That’s it for now. You can skip to the “call DPS REST APIs” section below, or read on if you want to know how to do this with a group enrollment.

DPS Group Enrollment – setup

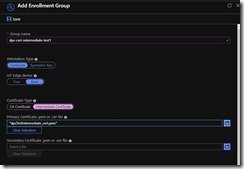

The setup for a symmetric key group enrollment is only slightly more complicated than for an individual one. On the portal side, it’s fairly simple. In the Azure portal, under your DPS instance, click ‘Manage enrollments’ in the left nav and then “Add Group Enrollment”. On the Add Enrollment page, give the enrollment a meaningful name and set Attestation Type to Symmetric Key, as in the screenshot.

Once you do that, click Save, and then drill back down into the enrollment and copy the “Primary Key” that got generated. This is the group key referenced above, from which we will derive the individual device keys.

In fact, let’s do that before the next section. Using the bash command given above for deriving the device key, here is an example with the group key from my ‘dps-test-sym-group1’ group enrollment, using ‘dps-test-sym-device01’ as my registration id.

You can see from the picture that the script generated a device-specific key (by hashing the registration id with the group key).

Just like with the individual enrollment above, we now have the pieces we need to generate our SAS token and call the DPS registration REST APIs.

call DPS REST APIs

Now that we have everything set up, enrolled, and our device-specific keys ready, we can prepare to call the APIs. First we need to generate our SAS token to authenticate. Plug the values from your DPS instance into the Python script you saved earlier. For the [device_key] parameter, be sure to use either the individual device key you copied earlier or, for a group enrollment, the derived key you just created, not the group enrollment key.

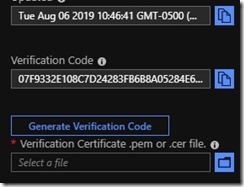

Here is an example of a run with my keys, etc.

The very last line of the output is the one we need. In my case, it was (with a couple of characters changed to protect my DPS):

SharedAccessSignature sr=0ne00052505%2Fregistrations%2Fdps-test-sym-device01&skn=registration&sig=FKOnylJndmpPYgJ5CXkw1pw3kiywt%2FcJIi9eu4xJAEY%3D&se=1568718116
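If you want to sanity-check a token before using it, its fields can be pulled back apart with the standard library. This is a sketch: `parse_sas_token` is a hypothetical helper that URL-decodes `sr` (resource URI), `skn` (policy name), `sig` (signature) and `se` (expiry, in seconds since the Unix epoch) from a token like the one shown here.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs

PREFIX = "SharedAccessSignature "


def parse_sas_token(token: str) -> dict:
    """Split 'SharedAccessSignature k=v&k=v...' into a dict of URL-decoded fields (hypothetical helper)."""
    if not token.startswith(PREFIX):
        raise ValueError("not a SharedAccessSignature token")
    fields = parse_qs(token[len(PREFIX):], strict_parsing=True)
    return {name: values[0] for name, values in fields.items()}


if __name__ == "__main__":
    token = ("SharedAccessSignature sr=0ne00052505%2Fregistrations%2Fdps-test-sym-device01"
             "&skn=registration&sig=FKOnylJndmpPYgJ5CXkw1pw3kiywt%2FcJIi9eu4xJAEY%3D&se=1568718116")
    parts = parse_sas_token(token)
    print(parts["sr"])   # 0ne00052505/registrations/dps-test-sym-device01
    print(parts["skn"])  # registration
    # Convert the expiry to a readable UTC timestamp to check the token's lifetime
    print(datetime.fromtimestamp(int(parts["se"]), tz=timezone.utc))
```

A mismatched `sr` (wrong scope id or registration id) or an already-passed `se` are easy mistakes to spot this way before calling DPS.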

So we now have the pieces we need for the API call.

The curl command for the registration API looks like this (with the variable parts in square brackets):

```bash
curl -L -i -X PUT -H 'Content-Type: application/json' -H 'Content-Encoding: utf-8' -H 'Authorization: [sas_token]' -d '{"registrationId": "[registration_id]"}' 'https://global.azure-devices-provisioning.net/[dps_scope_id]/registrations/[registration_id]/register?api-version=2019-03-31'
```

where

- [sas_token] is the one we just generated

- [dps_scope_id] is the one we grabbed earlier from the azure portal

- [registration_id] is the one we chose for our device

- -L tells curl to follow redirects

- -i tells curl to output response headers so we can see them

- -X PUT makes this a PUT request

- -H ‘Content-Type: application/json’ and -H ‘Content-Encoding: utf-8’ are required and tell DPS we are sending UTF-8 encoded JSON in the body (change the encoding to whatever matches what you are sending)

Above is an example of my call and the results returned.

Note two things. The first is the operationId. Provisioning a device to an IoT Hub through DPS is a (potentially) long-running operation, and is therefore done asynchronously. So to see the status of your IoT Hub provisioning, we’ll need to poll for it. I’ll get to that in a minute. The second is the “status” field, which starts out as ‘assigning’.
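If you would rather script the registration call than run curl by hand, here is a sketch of the same PUT request using only Python’s standard library. `build_register_request` and `send` are hypothetical helper names, and the host and api-version are copied from the curl command. One difference worth knowing: unlike `curl -L`, `urllib` does not automatically follow redirects for PUT requests.

```python
import json
import urllib.request

DPS_HOST = "https://global.azure-devices-provisioning.net"
API_VERSION = "2019-03-31"


def build_register_request(scope_id: str, registration_id: str, sas_token: str) -> urllib.request.Request:
    """Build (but do not send) the PUT .../register request from the curl command (hypothetical helper)."""
    url = f"{DPS_HOST}/{scope_id}/registrations/{registration_id}/register?api-version={API_VERSION}"
    body = json.dumps({"registrationId": registration_id}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Content-Encoding": "utf-8",
            "Authorization": sas_token,
        },
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON body; HTTP errors raise urllib.error.HTTPError."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

The dictionary returned by `send` corresponds to the JSON body in the curl output, so `operationId` and `status` are the two keys to read from it.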

The next API call we need to make is to get the status. You’ll basically do this in a loop until you get either a success or a failure status. The valid status values for DPS are:

- assigned – the return value from the status call will indicate which IoT Hub the device was assigned to

- assigning

- disabled – the device enrollment record is disabled in DPS, so the device can’t be assigned

- failed – assignment failed. There will be an errorCode and errorMessage in a registrationState record in the returned JSON to indicate what failed.

- unassigned – ummm... no clue.
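Here is a sketch of how a script might interpret those values. `summarize_status` is a hypothetical helper; it reads `status` from the response and, for ‘assigned’ and ‘failed’, the `assignedHub`/`deviceId` or `errorCode`/`errorMessage` fields of `registrationState`. Treating ‘unassigned’ like ‘assigning’ (keep polling) is an assumption, since its meaning isn’t covered here.

```python
# Assumption: 'unassigned' is treated as still in progress, like 'assigning'.
IN_PROGRESS = {"unassigned", "assigning"}


def summarize_status(response: dict) -> str:
    """One-line summary of a DPS register or operation-status JSON body (hypothetical helper)."""
    status = response.get("status")
    state = response.get("registrationState") or {}
    if status in IN_PROGRESS:
        return f"still {status}; poll again with operationId {response.get('operationId')}"
    if status == "assigned":
        return f"assigned to {state.get('assignedHub')} as {state.get('deviceId')}"
    if status == "failed":
        return f"failed: {state.get('errorCode')} {state.get('errorMessage')}"
    return str(status)  # e.g. 'disabled'


if __name__ == "__main__":
    # Hypothetical, trimmed response body for illustration only
    sample = {
        "status": "assigned",
        "registrationState": {"assignedHub": "my-hub.azure-devices.net", "deviceId": "dps-test-sym-device01"},
    }
    print(summarize_status(sample))  # assigned to my-hub.azure-devices.net as dps-test-sym-device01
```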

To make the aforementioned status call, you need the operationId from the registration (PUT) response. The curl command for that call is (with the variable parts in square brackets):

```bash
curl -L -i -X GET -H 'Content-Type: application/json' -H 'Content-Encoding: utf-8' -H 'Authorization: [sas_token]' 'https://global.azure-devices-provisioning.net/[dps_scope_id]/registrations/[registration_id]/operations/[operation_id]?api-version=2019-03-31'
```

Use the same sas_token and registration_id as before, along with the operation_id you just copied.
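The status lookup can be built the same way in Python. This is a sketch: `build_status_request` is a hypothetical helper, and the host, path, api-version, and headers are taken straight from the curl command for the status call.

```python
import urllib.request

DPS_HOST = "https://global.azure-devices-provisioning.net"
API_VERSION = "2019-03-31"


def build_status_request(scope_id: str, registration_id: str,
                         operation_id: str, sas_token: str) -> urllib.request.Request:
    """Build (but do not send) the GET .../operations/[operation_id] request (hypothetical helper)."""
    url = (f"{DPS_HOST}/{scope_id}/registrations/{registration_id}"
           f"/operations/{operation_id}?api-version={API_VERSION}")
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "Content-Type": "application/json",
            "Content-Encoding": "utf-8",
            "Authorization": sas_token,  # same SAS token as the registration call
        },
    )
```

Passing the result to `urllib.request.urlopen` and parsing the body with `json.loads` gives you the same JSON the curl call prints.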

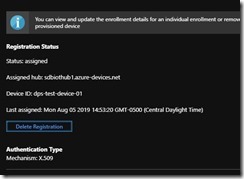

A successful call looks like this:

Unfortunately, I’m not a fast enough copy/paster to catch it in any status other than ‘assigned’ (DPS is just too fast for me). But if you do all of this programmatically or in a script, you may be able to catch the intermediate status.
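Here is a sketch of that polling loop in Python. `wait_for_assignment` is a hypothetical helper: it takes any zero-argument callable that performs the GET .../operations/[operation_id] call and returns the parsed JSON, and keeps calling it until the status is no longer in progress or a timeout expires. As before, treating ‘unassigned’ as in progress is an assumption.

```python
import time
from typing import Callable

# Assumption: both of these mean "not finished yet, keep polling".
IN_PROGRESS = ("unassigned", "assigning")


def wait_for_assignment(fetch_status: Callable[[], dict],
                        interval_seconds: float = 2.0,
                        timeout_seconds: float = 60.0) -> dict:
    """Poll fetch_status() until DPS reports a final status; raise TimeoutError otherwise."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        response = fetch_status()
        if response.get("status") not in IN_PROGRESS:
            return response  # assigned, failed, disabled, ...
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {response.get('status')} after {timeout_seconds} seconds")
        time.sleep(interval_seconds)
```

Injecting the fetch function keeps the loop independent of how the HTTP call is made, so the same loop works whether you use urllib, another HTTP library, or a stub while testing.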

Voilà

That’s it. Done. You can check the status of your registration in the Azure portal and see that the device was assigned.

Enjoy, and as always, if you have questions or even suggested topics (remember, it has to be complex, technical, and not well covered in the docs), hit me up in the comments.